Federated Learning

Federated Learning enables decentralized training of machine learning models by allowing multiple devices or institutions to collaboratively learn a shared model without sharing their raw data. It is valuable in privacy-sensitive applications, as it preserves data privacy while leveraging distributed datasets for more robust model training.

Our work focuses on three topics: Federated Learning Security, Generalization and Robustness, and Federated Graph Learning.
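As a rough illustration of how a shared model can be learned without pooling raw data, the sketch below averages client parameters weighted by local sample counts, in the style of federated averaging (FedAvg). The choice of FedAvg, the function name `federated_average`, and the toy parameter dictionaries are assumptions made for illustration; the description above does not prescribe a specific aggregation rule.

```python
from typing import Dict, List

Params = Dict[str, List[float]]


def federated_average(client_params: List[Params], client_sizes: List[int]) -> Params:
    """Average client parameters, weighting each client by its local sample count."""
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    averaged: Params = {}
    for name in client_params[0]:
        length = len(client_params[0][name])
        averaged[name] = [
            sum(p[name][i] * n for p, n in zip(client_params, client_sizes)) / total
            for i in range(length)
        ]
    return averaged


# Hypothetical clients: only parameters are exchanged, never the local data.
clients = [
    {"w": [1.0, 2.0], "b": [0.0]},
    {"w": [3.0, 4.0], "b": [1.0]},
]
global_params = federated_average(clients, client_sizes=[1, 3])
```

The second client holds three times as many samples, so its parameters dominate the average.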

Highlight: Survey and Benchmarks

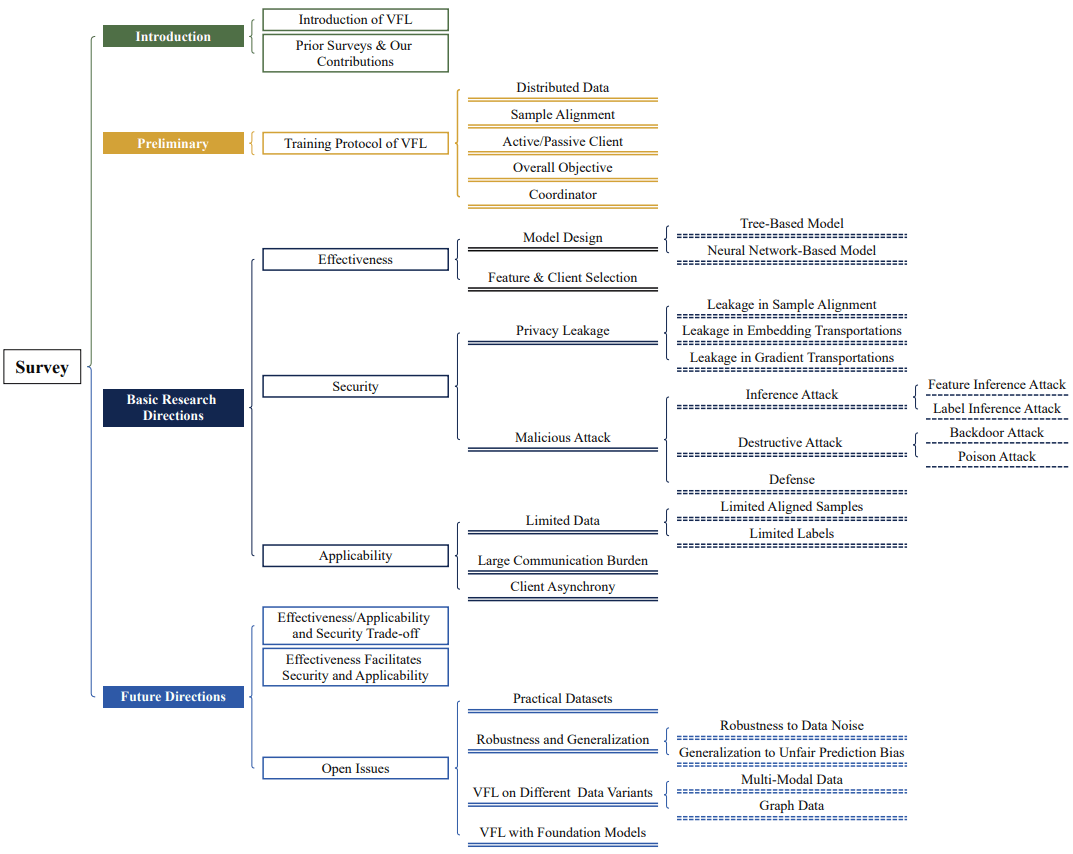

Vertical Federated Learning for Effectiveness, Security, Applicability: A Survey

ACM Computing Surveys, 2025.

We introduce the history and background of vertical federated learning (VFL) and summarize its general training protocol. We then revisit the taxonomies used in recent reviews and analyze their limitations in depth. For a comprehensive and structured discussion, we synthesize recent research from three fundamental perspectives: effectiveness, security, and applicability. Finally, we discuss several critical future research directions in VFL, which may facilitate further developments in this field.

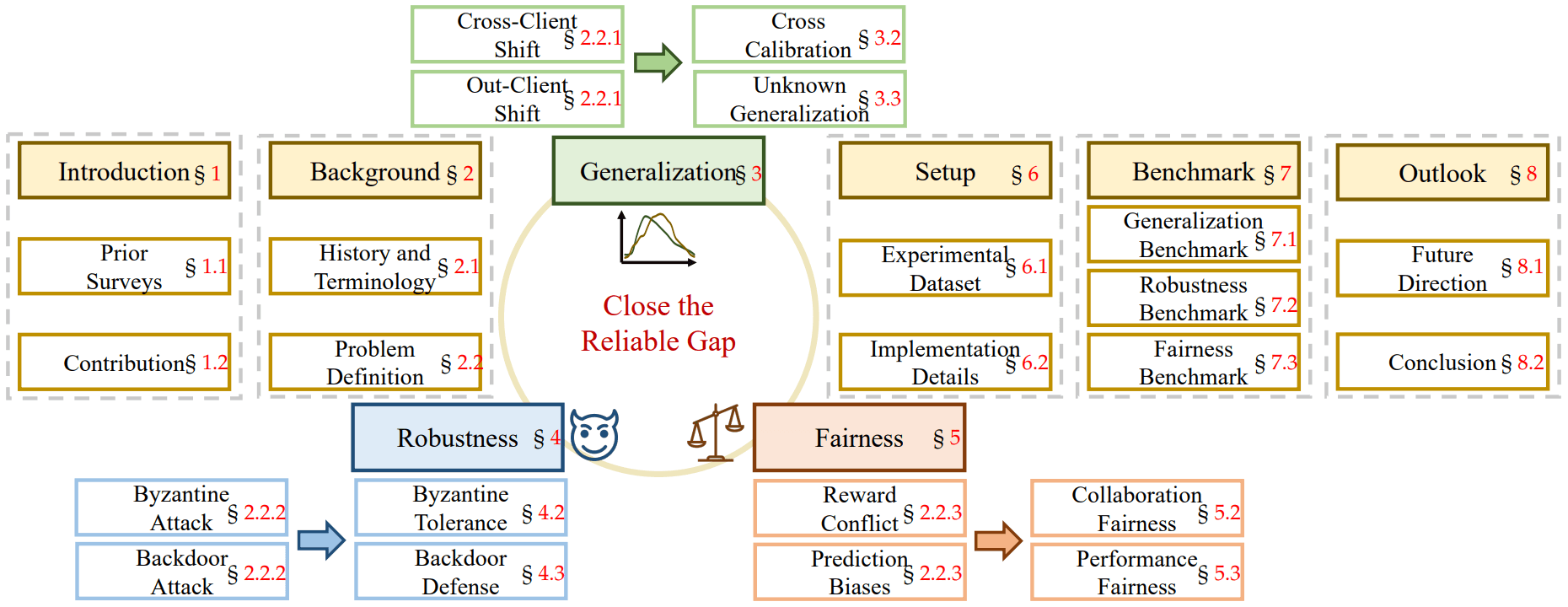

Federated learning for generalization, robustness, fairness: A survey and benchmark

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2024.

We comprehensively review three basic lines of research: generalization, robustness, and fairness, by introducing their respective background concepts, task settings, and main challenges. We also offer a detailed overview of representative literature on both methods and datasets. We further benchmark the reviewed methods on several well-known datasets. Finally, we point out several open issues in this field and suggest opportunities for further research.

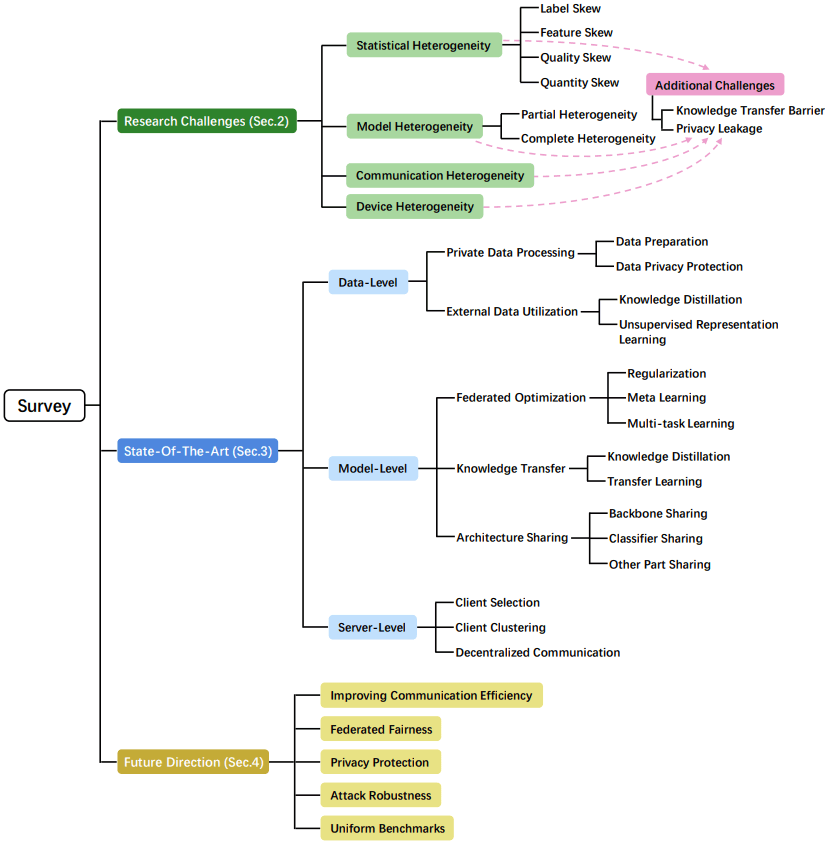

Heterogeneous Federated Learning: State-of-the-art and Research Challenges

ACM Computing Surveys, 2023.

We first summarize the research challenges in heterogeneous federated learning (HFL) from five aspects: statistical heterogeneity, model heterogeneity, communication heterogeneity, device heterogeneity, and additional challenges. We then review recent advances in HFL and propose a new taxonomy of existing HFL methods, with an in-depth analysis of their pros and cons. We classify existing methods into three levels according to the HFL procedure: data-level, model-level, and server-level. Finally, we discuss several critical and promising future research directions in HFL, which may facilitate further developments in this field.

Highlight: Federated Learning Security

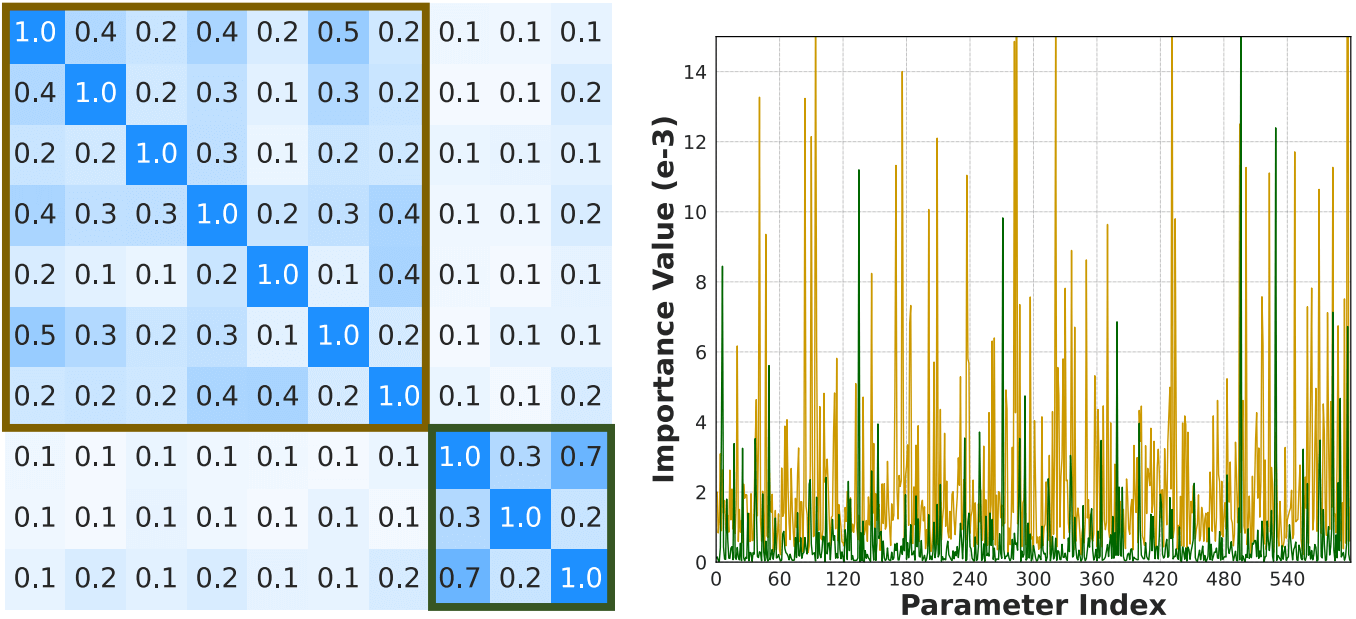

Parameter Disparities Dissection for Backdoor Defense in Heterogeneous Federated Learning

Thirty-eighth Conference on Neural Information Processing Systems (NeurIPS), 2024.

We introduce the Fisher Discrepancy Cluster and Rescale (FDCR) method, which utilizes Fisher Information to calculate the degree of parameter importance for local distributions. This allows us to reweight client parameter updates and identify those with large discrepancies as backdoor attackers. Furthermore, we prioritize rescaling important parameters to expedite adaptation to the target distribution, encouraging significant elements to contribute more while diminishing the influence of trivial ones. This approach enables FDCR to handle backdoor attacks in heterogeneous federated learning environments.
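The role Fisher information plays here can be illustrated with a small sketch. It estimates a diagonal empirical Fisher as the mean squared per-sample gradient and uses it to rescale a client update so that important parameters contribute more. This is not the FDCR implementation: the helper names, the max-normalization, and the toy gradients are assumptions, and the clustering step that flags attackers is omitted.

```python
from typing import List


def diagonal_fisher(per_sample_grads: List[List[float]]) -> List[float]:
    """Empirical diagonal Fisher: mean squared gradient for each parameter."""
    n = len(per_sample_grads)
    dim = len(per_sample_grads[0])
    return [sum(g[i] ** 2 for g in per_sample_grads) / n for i in range(dim)]


def rescale_update(update: List[float], fisher: List[float]) -> List[float]:
    """Scale each coordinate by its importance relative to the most important one."""
    top = max(fisher)
    if top == 0.0:
        # Assumption: with no importance signal, leave the update unchanged.
        return list(update)
    return [u * f / top for u, f in zip(update, fisher)]


# Hypothetical per-sample gradients for a two-parameter model.
grads = [[1.0, 0.0], [3.0, 1.0]]
fisher = diagonal_fisher(grads)
rescaled = rescale_update([0.2, 0.2], fisher)
```

In this toy case the first parameter has ten times the Fisher value of the second, so the second coordinate of the update is shrunk by a factor of ten.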

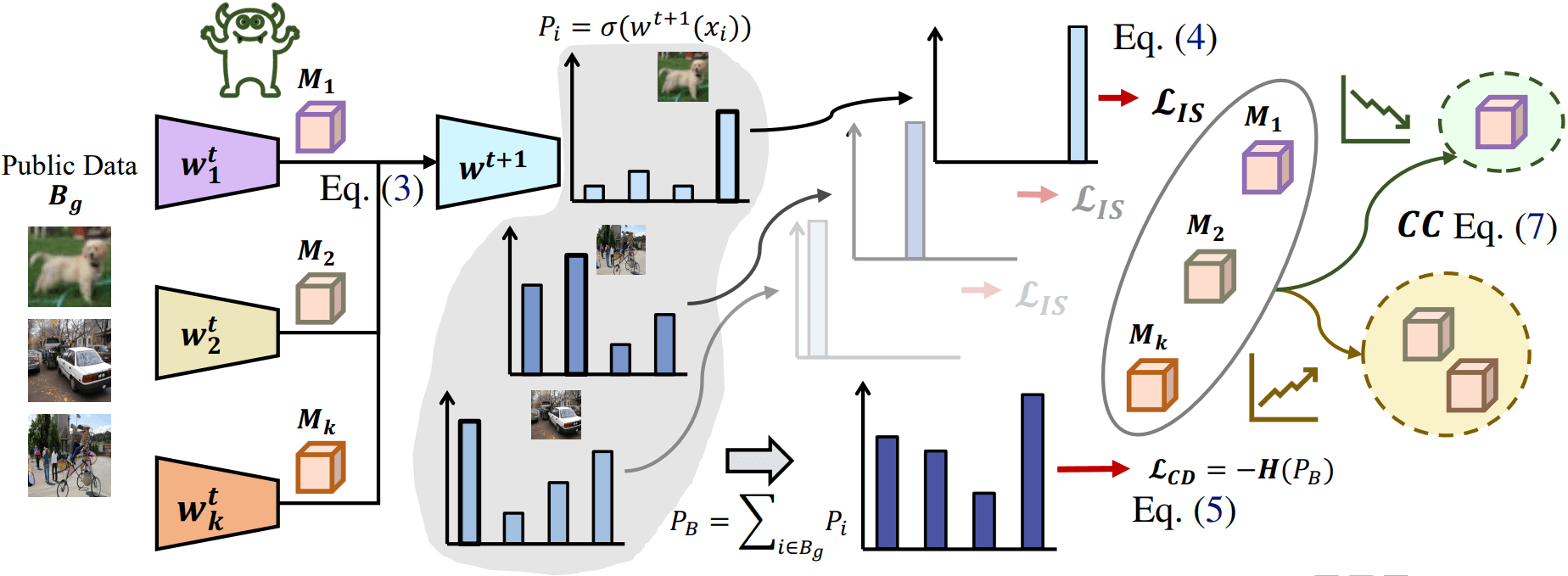

Self-Driven Entropy Aggregation for Byzantine-Robust Heterogeneous Federated Learning

International Conference on Machine Learning (ICML), 2024.

We propose Self-Driven Entropy Aggregation (SDEA), which leverages a random public dataset to conduct Byzantine-robust aggregation in heterogeneous federated learning. We observe that benign clients typically present more confident (sharper) predictions than Byzantine attackers on the public dataset. Thus, we highlight benign clients by introducing learnable aggregation weights that minimize the instance-prediction entropy of the global model on the random public dataset. However, inherent data heterogeneity brings heterogeneous sharpness: clients are optimized under distinct distributions and thus present diverse predictive preferences. The learnable aggregation weights would blindly allocate high attention to the few clients with sharper predictions, resulting in a biased global model. To alleviate this problem, we encourage the global model to offer diverse predictions via batch-prediction entropy maximization and conduct clustering to divide the weights equally among honest clients, accommodating their different tendencies. This enables SDEA to detect Byzantine attackers in heterogeneous federated learning.
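The two entropy terms mentioned above can be made concrete with a short sketch. Instance-prediction entropy is taken here as the mean entropy of individual predictions (low when predictions are sharp), and batch-prediction entropy as the entropy of the batch-averaged prediction (high when predicted classes are diverse). The function names and toy probabilities are assumptions, and the learnable aggregation weights and clustering of SDEA are not modeled.

```python
import math
from typing import List


def entropy(p: List[float]) -> float:
    """Shannon entropy (natural log) of one probability vector."""
    return -sum(q * math.log(q) for q in p if q > 0.0)


def instance_entropy(batch_probs: List[List[float]]) -> float:
    """Mean entropy of each prediction; lower means sharper predictions."""
    return sum(entropy(p) for p in batch_probs) / len(batch_probs)


def batch_entropy(batch_probs: List[List[float]]) -> float:
    """Entropy of the batch-averaged prediction; higher means more diverse classes."""
    n = len(batch_probs)
    k = len(batch_probs[0])
    mean = [sum(p[c] for p in batch_probs) / n for c in range(k)]
    return entropy(mean)


# Hypothetical predictions on a public batch.
sharp = [[0.98, 0.01, 0.01], [0.01, 0.98, 0.01]]
flat = [[0.34, 0.33, 0.33], [0.33, 0.34, 0.33]]
collapsed = [[1.0, 0.0], [1.0, 0.0]]
diverse = [[1.0, 0.0], [0.0, 1.0]]
```

Minimizing the first quantity alone would reward a model that always predicts one class with full confidence (`collapsed`), which is why maximizing the second quantity is a useful counterweight.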

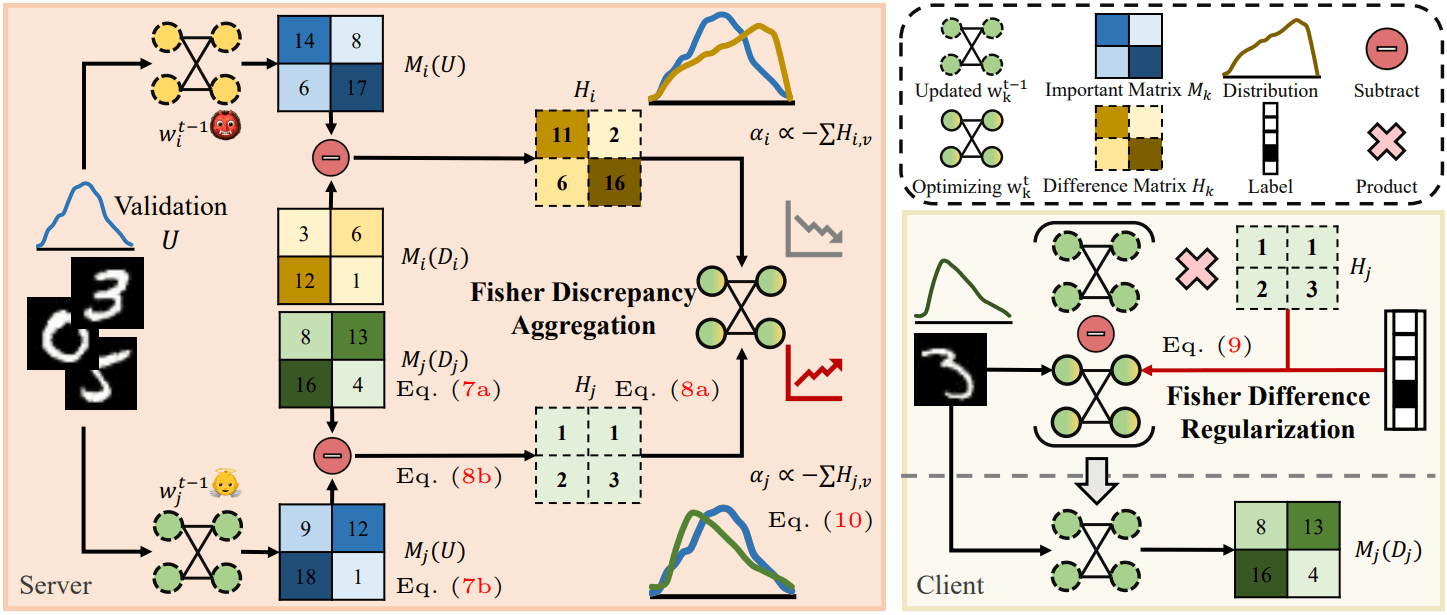

Fisher Calibration for Backdoor-Robust Heterogeneous Federated Learning

European Conference on Computer Vision (ECCV), 2024.

We propose Self-Driven Fisher Calibration (SDFC), which uses Fisher Information to calculate parameter importance for the local agnostic distribution and the global validation distribution, and regulates the elements whose importance differs greatly between the two. Furthermore, we allocate higher aggregation weights to clients with relatively small overall parameter differences, which encourages clients whose local distribution is close to the global distribution to contribute more to the federation. This enables SDFC to handle backdoor attackers in heterogeneous federated learning.

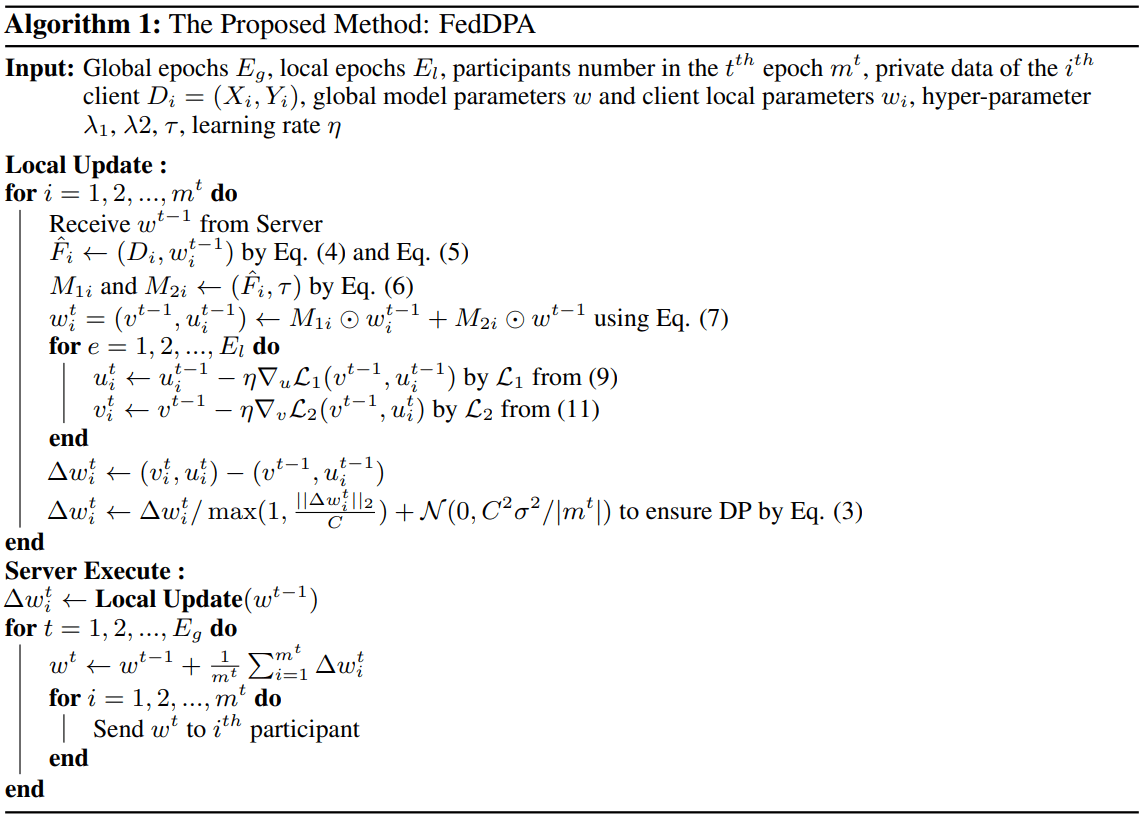

Dynamic Personalized Federated Learning with Adaptive Differential Privacy

Thirty-seventh Conference on Neural Information Processing Systems (NeurIPS), 2023.

We propose a novel federated learning method with Dynamic Fisher Personalization and Adaptive Constraint (FedDPA). First, we use layer-wise Fisher information to measure the information content of local parameters. During personalization, we retain the local parameters with high Fisher values, which are considered informative, and at the same time protect them from noise perturbation. Second, we apply differentiated adaptive constraint strategies to the personalized parameters and the shared parameters identified in the previous step for better convergence.
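The selection step in the first part can be pictured as a per-layer mask. In the sketch below, the local values with the highest Fisher scores in a layer are kept, and the remaining positions take the shared model's values. The function name `personalize_layer`, the `keep_ratio` threshold rule, and the toy numbers are assumptions; noise injection and the adaptive constraints are not modeled.

```python
from typing import List


def personalize_layer(local: List[float], shared: List[float],
                      fisher: List[float], keep_ratio: float = 0.5) -> List[float]:
    """Keep the local values with the highest Fisher scores; take the rest from the shared model."""
    if not (len(local) == len(shared) == len(fisher)):
        raise ValueError("all inputs must have the same length")
    # Assumption: a fixed fraction of each layer is treated as personalized.
    keep = max(1, int(keep_ratio * len(local)))
    ranked = sorted(range(len(fisher)), key=lambda i: fisher[i], reverse=True)
    kept = set(ranked[:keep])
    return [local[i] if i in kept else shared[i] for i in range(len(local))]


# Hypothetical layer with four parameters.
layer = personalize_layer(
    local=[1.0, 2.0, 3.0, 4.0],
    shared=[10.0, 20.0, 30.0, 40.0],
    fisher=[0.9, 0.1, 0.5, 0.2],
)
```

Positions 0 and 2 have the highest Fisher scores, so they keep their local values while positions 1 and 3 are overwritten by the shared model.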

Highlight: Generalization and Robustness

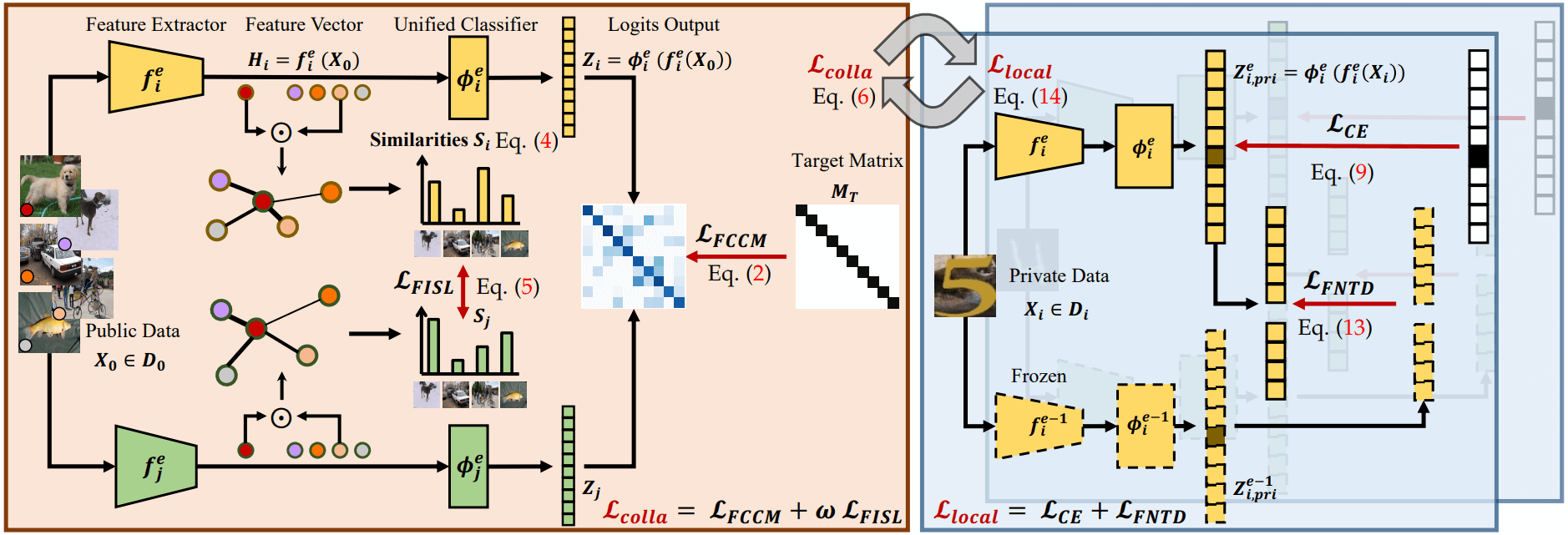

Generalizable Heterogeneous Federated Cross-Correlation and Instance Similarity Learning

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2023.

We present FCCL+, a novel federated correlation and similarity learning method with non-target distillation, which facilitates both intra-domain discriminability and inter-domain generalization. To address heterogeneity, we leverage irrelevant unlabeled public data for communication between heterogeneous participants. We construct a cross-correlation matrix and align the instance similarity distribution at both the logits and feature levels, which effectively overcomes the communication barrier and improves generalization ability. To address catastrophic forgetting in the local updating stage, FCCL+ introduces Federated Non Target Distillation, which retains inter-domain knowledge while avoiding the optimization conflict issue, fully distilling privileged inter-domain information by depicting posterior class relations. Because there is no standard benchmark for evaluating existing heterogeneous federated learning methods under the same setting, we present a comprehensive benchmark with extensive representative methods under four domain shift scenarios, supporting both heterogeneous and homogeneous federated settings.
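To make the cross-correlation matrix more tangible, the sketch below correlates the logits that two participants produce on the same public batch and scores the result with a loss that pushes diagonal entries toward 1 and off-diagonal entries toward 0. The exact objective used by FCCL+ is not given above, so this loss form, the `off_diag_weight` value, the function names, and the toy logits are all assumptions.

```python
import math
from typing import List

Matrix = List[List[float]]


def standardize_columns(x: Matrix, eps: float = 1e-8) -> Matrix:
    """Zero-mean, unit-variance normalization of each column over the batch."""
    n, d = len(x), len(x[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        column = [row[j] for row in x]
        mean = sum(column) / n
        std = math.sqrt(sum((v - mean) ** 2 for v in column) / n)
        for i in range(n):
            out[i][j] = (x[i][j] - mean) / (std + eps)
    return out


def cross_correlation(a: Matrix, b: Matrix) -> Matrix:
    """d x d matrix whose (u, v) entry correlates dimension u of a with dimension v of b."""
    za, zb = standardize_columns(a), standardize_columns(b)
    n, d = len(a), len(a[0])
    return [[sum(za[i][u] * zb[i][v] for i in range(n)) / n for v in range(d)]
            for u in range(d)]


def correlation_loss(c: Matrix, off_diag_weight: float = 0.005) -> float:
    """Push diagonal entries toward 1 and off-diagonal entries toward 0."""
    d = len(c)
    on_diag = sum((1.0 - c[u][u]) ** 2 for u in range(d))
    off_diag = sum(c[u][v] ** 2 for u in range(d) for v in range(d) if u != v)
    return on_diag + off_diag_weight * off_diag


# Hypothetical logits from two participants on the same three public samples.
logits_a = [[1.0, 0.0], [0.0, 1.0], [2.0, 1.0]]
logits_b = [[0.9, 0.1], [0.1, 0.8], [1.8, 1.1]]
c = cross_correlation(logits_a, logits_b)
loss = correlation_loss(c)
```

Because only logits on public data are compared, participants with different architectures can be aligned as long as their output dimensions match.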

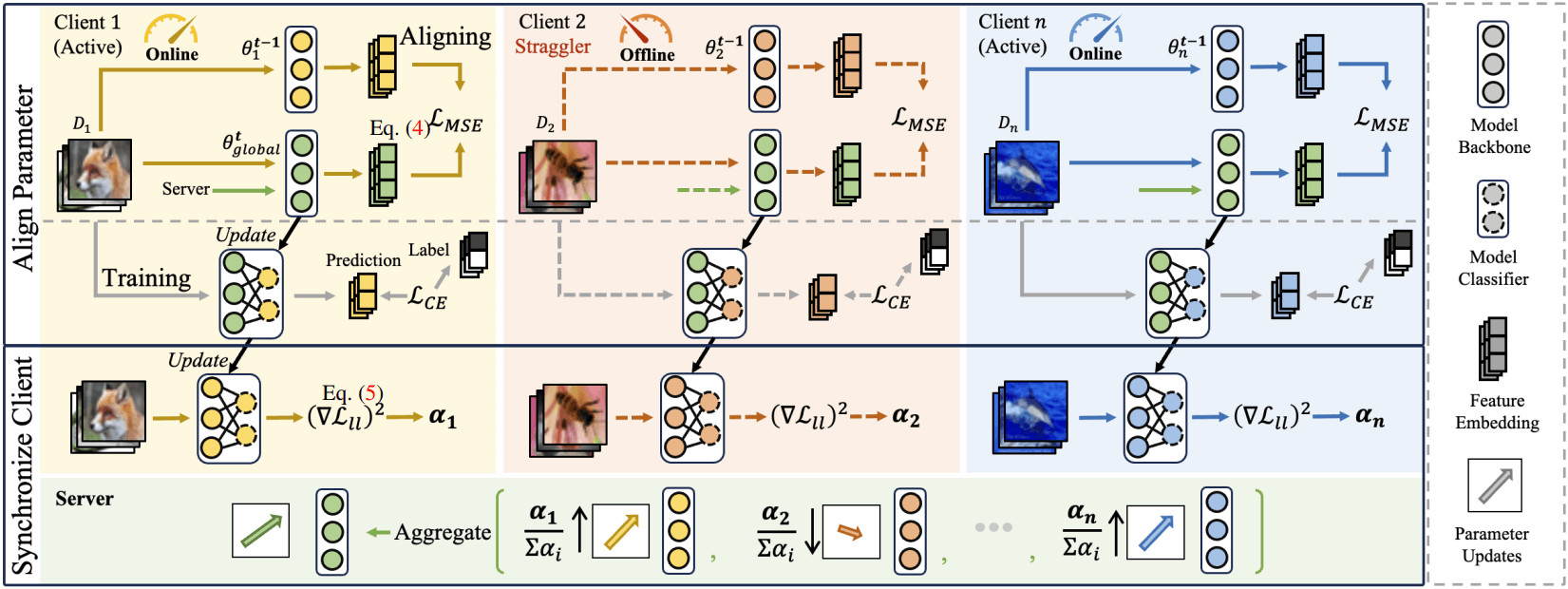

FedAS: Bridging Inconsistency in Personalized Federated Learning

Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024.

We present FedAS, a novel personalized federated learning (PFL) framework that uses Federated Parameter-Alignment and Client-Synchronization to overcome the inconsistency challenges in PFL. First, we enhance the localization of global parameters by infusing them with local insights: the shared parts learn from the previous model, which increases their local relevance and reduces the impact of parameter inconsistency. Furthermore, we design a robust aggregation method that mitigates the impact of stragglers by preventing their under-trained knowledge from being incorporated into the aggregated model.

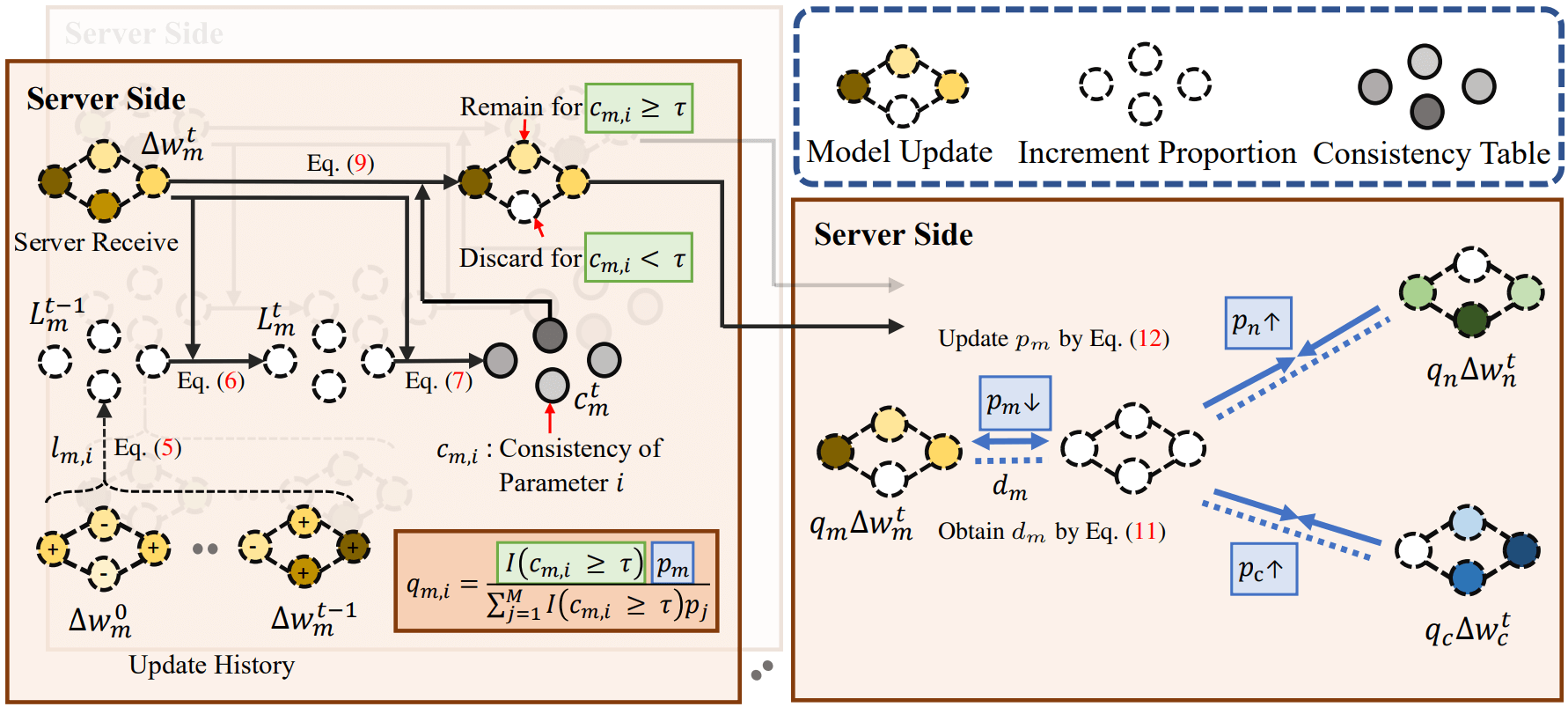

Fair Federated Learning under Domain Skew with Local Consistency and Domain Diversity

Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024.

First, we selectively discard unimportant parameter updates so that updates from clients with lower performance are not overwhelmed by unimportant parameters, resulting in fairer generalization performance. Second, we propose a fair aggregation objective that prevents the global model from becoming biased towards some domains, ensuring that the global model continuously aligns with an unbiased model.

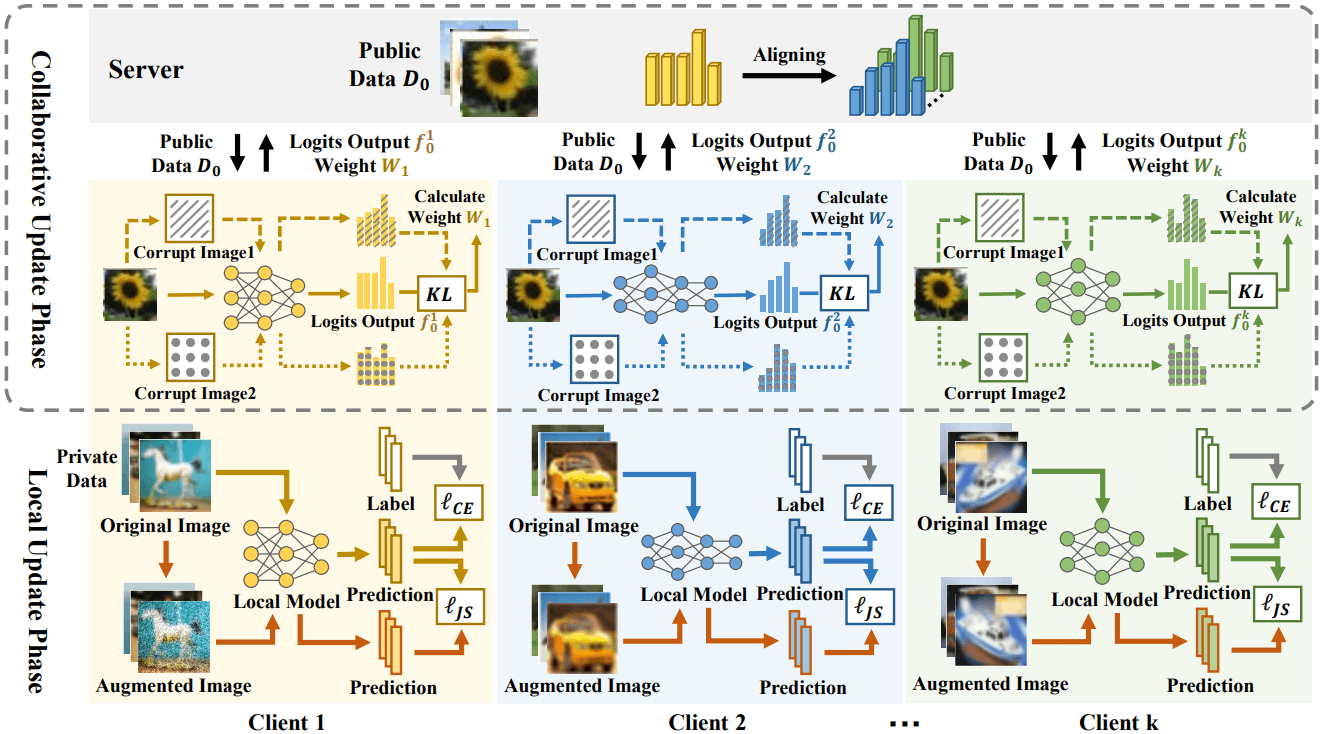

Robust Heterogeneous Federated Learning under Data Corruption

International Conference on Computer Vision (ICCV), 2023.

We design a novel method named Augmented Heterogeneous Federated Learning (AugHFL), which consists of two stages: (1) In the local update stage, a corruption-robust data augmentation strategy is adopted to minimize the adverse effects of local corruption while enabling the models to learn rich local knowledge. (2) In the collaborative update stage, we design a robust re-weighted communication approach, which implements communication between heterogeneous models while mitigating corrupted knowledge transfer from others.

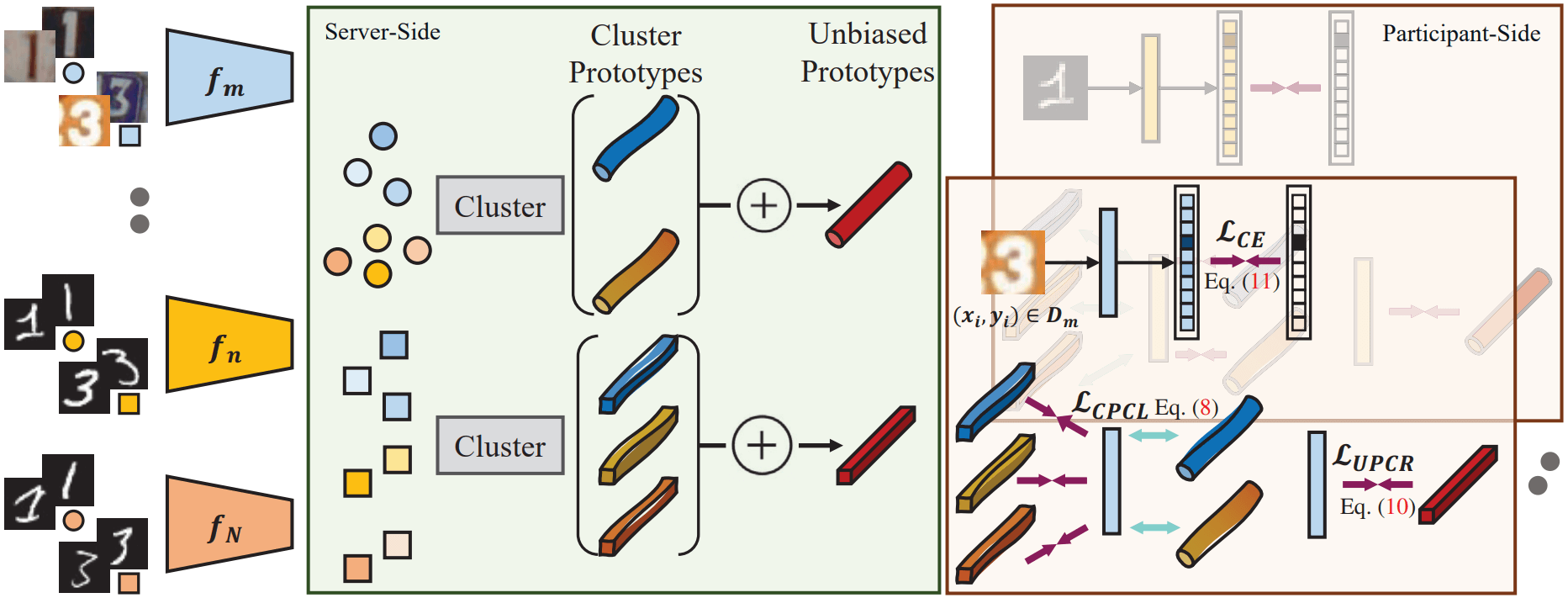

Rethinking Federated Learning With Domain Shift: A Prototype View

Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023.

We propose Federated Prototypes Learning (FPL) for federated learning under domain shift. The core idea is to construct cluster prototypes and unbiased prototypes, providing fruitful domain knowledge and a fair convergent target. On the one hand, we pull the sample embedding closer to cluster prototypes belonging to the same semantics than cluster prototypes from distinct classes. On the other hand, we introduce consistency regularization to align the local instance with the respective unbiased prototype.
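The prototype idea can be sketched in a few lines: a class prototype is the mean embedding of that class on one client, and an unbiased prototype averages the prototypes collected for the same class across clients. This sketch skips the clustering step entirely and treats each client prototype as its own cluster, which is a simplifying assumption; the function names and toy embeddings are likewise hypothetical.

```python
from collections import defaultdict
from typing import Dict, List

Prototypes = Dict[int, List[float]]


def class_prototypes(embeddings: List[List[float]], labels: List[int]) -> Prototypes:
    """Mean embedding per class on a single client."""
    grouped = defaultdict(list)
    for vec, y in zip(embeddings, labels):
        grouped[y].append(vec)
    return {y: [sum(col) / len(vecs) for col in zip(*vecs)]
            for y, vecs in grouped.items()}


def unbiased_prototypes(client_protos: List[Prototypes]) -> Prototypes:
    """Average the per-client prototypes of each class with equal weight."""
    grouped = defaultdict(list)
    for protos in client_protos:
        for y, p in protos.items():
            grouped[y].append(p)
    return {y: [sum(col) / len(ps) for col in zip(*ps)]
            for y, ps in grouped.items()}


# Hypothetical two-dimensional embeddings from two clients (two domains).
client_a = class_prototypes([[0.0, 0.0], [2.0, 2.0], [4.0, 0.0]], [0, 0, 1])
client_b = class_prototypes([[3.0, 3.0]], [0])
targets = unbiased_prototypes([client_a, client_b])
```

Equal weighting across prototypes, rather than across samples, keeps a client with many samples from dominating the target for a class.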

Robust Federated Learning With Noisy and Heterogeneous Clients

Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

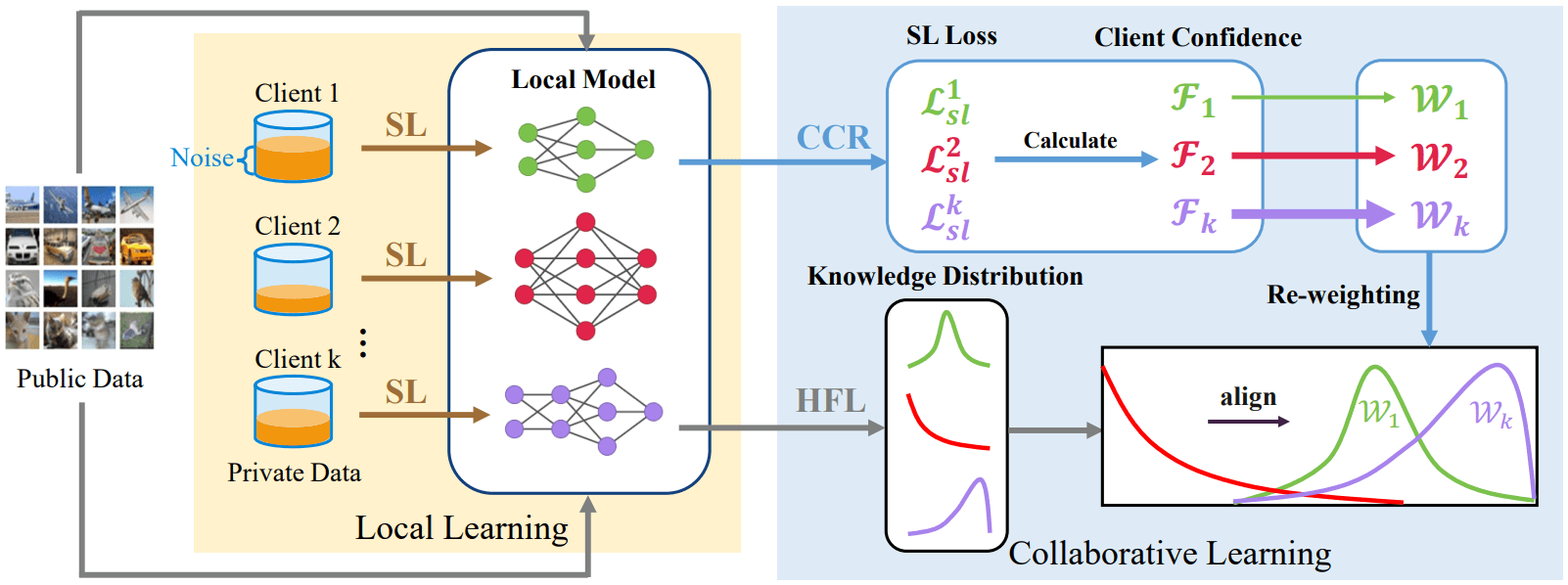

We present RHFL (Robust Heterogeneous Federated Learning), a novel solution that handles label noise and performs federated learning in a single framework. It has three key features: (1) For communication between heterogeneous models, we directly align the models' feedback on public data, which does not require additional shared global models for collaboration. (2) For internal label noise, we apply a robust noise-tolerant loss function to reduce its negative effects. (3) For challenging noisy feedback from other participants, we design a novel client confidence re-weighting scheme, which adaptively assigns a weight to each client in the collaborative learning stage.
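For item (2), one well-known noise-tolerant choice is a symmetric cross-entropy, which adds a bounded reverse cross-entropy term to the usual cross-entropy. The description above does not name the loss, so picking this one is an assumption, as are the function name and the default values of `alpha`, `beta`, and `log_zero` (the constant that stands in for log 0).

```python
import math
from typing import List


def symmetric_cross_entropy(probs: List[float], label: int,
                            alpha: float = 1.0, beta: float = 1.0,
                            log_zero: float = -4.0) -> float:
    """alpha * CE + beta * reverse CE for one sample with a hard label."""
    # Standard cross-entropy; clipped to avoid log(0).
    ce = -math.log(max(probs[label], 1e-12))
    # Reverse cross-entropy: log(0) for non-label classes is replaced by log_zero,
    # so this term is bounded by -log_zero no matter how wrong the prediction is.
    rce = -log_zero * sum(p for k, p in enumerate(probs) if k != label)
    return alpha * ce + beta * rce


# Hypothetical predictions for a sample labeled as class 0.
loss_confident = symmetric_cross_entropy([0.9, 0.1], label=0)
loss_wrong = symmetric_cross_entropy([0.1, 0.9], label=0)
```

The bounded reverse term limits how much a single mislabeled sample can contribute, which is the property that makes this family of losses tolerant to label noise.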

Highlight: Federated Graph Learning

FedSSP: Federated Graph Learning with Spectral Knowledge and Personalized Preference

Thirty-eighth Conference on Neural Information Processing Systems (NeurIPS), 2024.

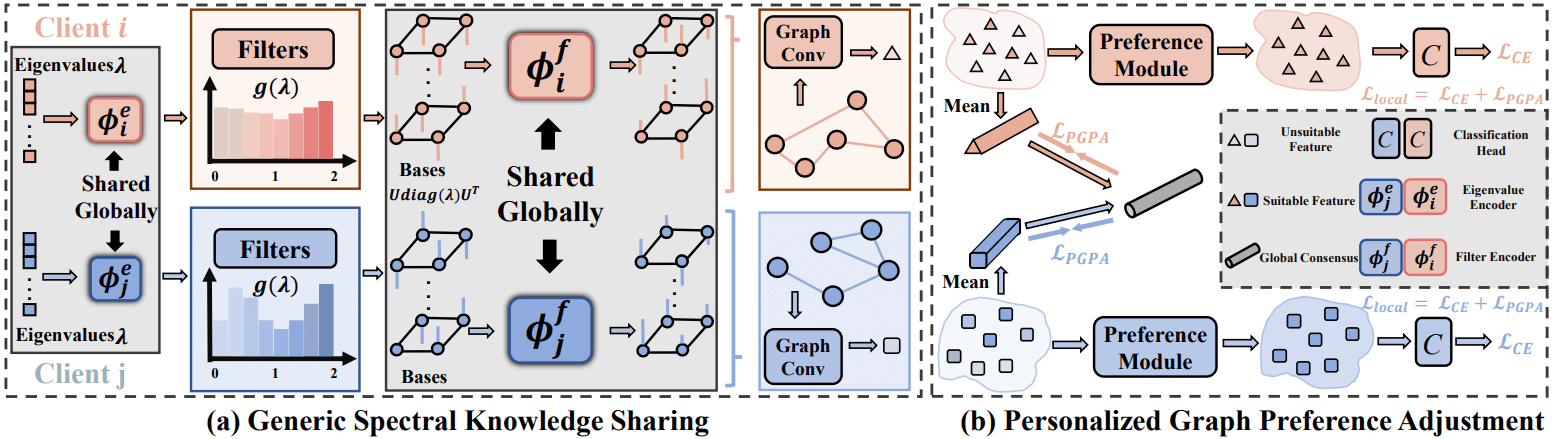

We show that inherent structural domain shift is well reflected in the spectral nature of graphs, and our method overcomes this shift by sharing generic spectral knowledge. We also identify biased message-passing schemes for graph structures and propose a personalized preference module. Combining both strategies for effective global collaboration and personalized local application, we propose our pFGL framework FedSSP, which Shares generic Spectral knowledge while satisfying graph Preferences.

Federated Graph Learning under Domain Shift with Generalizable Prototypes

Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), 2024.

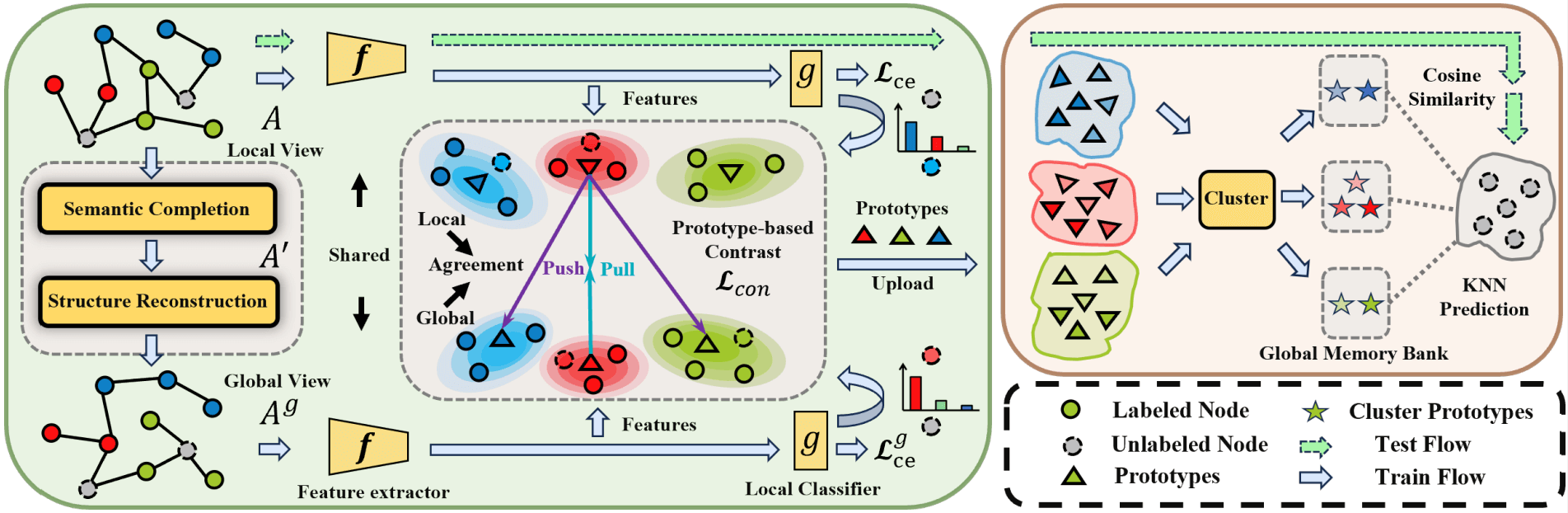

We propose a novel framework called Federated Graph Learning with Generalizable Prototypes (FGGP). It decouples the global model into two levels and bridges them via prototypes. These prototypes, which are semantic centers derived from the feature extractor, provide valuable classification information. At the classification model level, we eschew traditional classifiers and instead leverage clustered prototypes to capture rich domain information and enhance the discriminative capability of the classes, improving the performance of multi-domain predictions. Furthermore, at the feature extractor level, we go beyond traditional approaches by implicitly injecting distinct global knowledge and employing contrastive learning to obtain more powerful prototypes while enhancing the generalization ability of the feature extractor.

Federated Graph Semantic and Structural Learning

International Joint Conference on Artificial Intelligence (IJCAI), 2023.

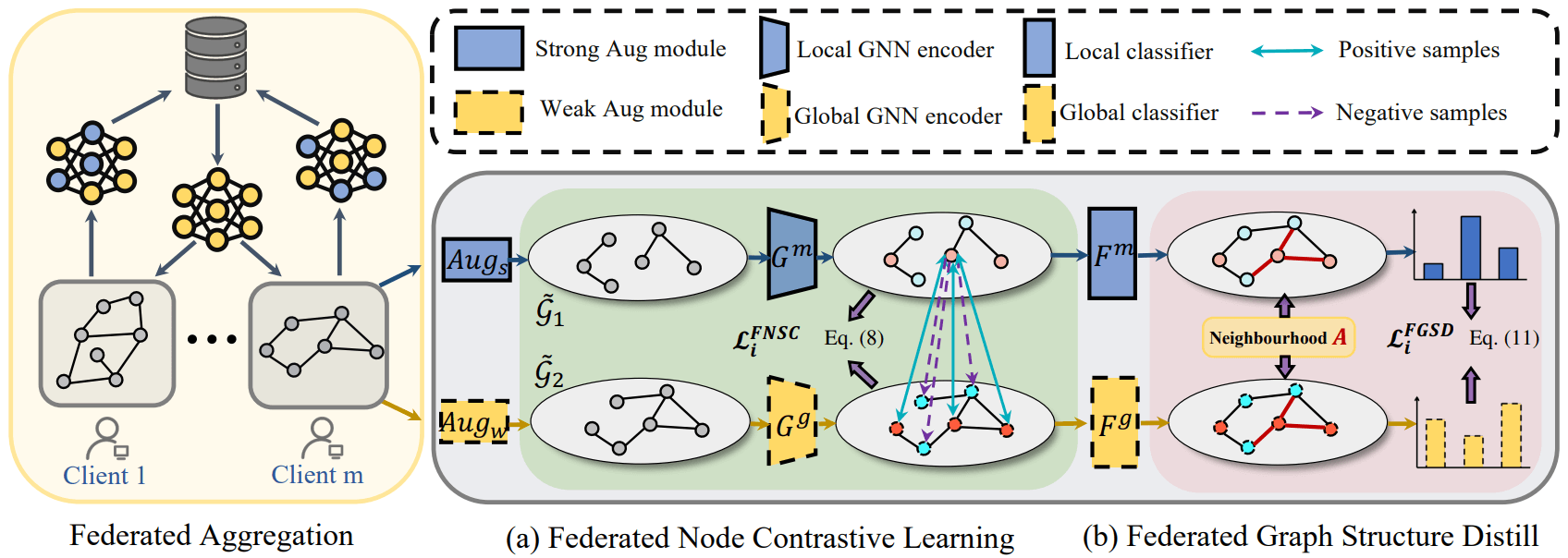

We introduce a novel federated graph learning framework (FGSSL) for both node-level and graph-level calibration. For the former, Federated Node Semantic Contrast calibrates local node semantics with the assistance of the global model without compromising privacy. For the latter, Federated Graph Structure Distillation transfers the adjacency relationships from the global model to the local model, fully reinforcing the graph representation with aggregated relations.